Commands to Dump Data from Linux into HDFS

Step 1. Upload File to HDFS

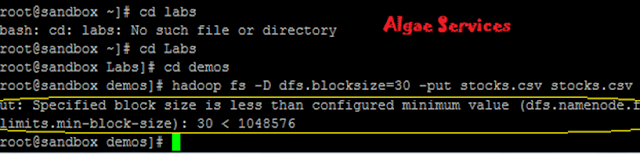

1. Try putting this file into HDFS with a block size of 30 bytes using the command below.

2. Command: hadoop fs -D dfs.blocksize=30 -put stocks.csv stocks.csv

Error: put: Specified block size is less than configured minimum value (dfs.namenode.fs-limits.min-block-size): 30 < 1048576

3. Command: hadoop fs -D dfs.blocksize=2000000 -put stocks.csv stocks.csv

Notice: 2,000,000 is above the 1048576-byte minimum, but it is still not a valid block size because it is not a multiple of 512 (the checksum chunk size, dfs.bytes-per-checksum).
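The two failures above come from two separate rules on dfs.blocksize: the value must be at least dfs.namenode.fs-limits.min-block-size and a multiple of dfs.bytes-per-checksum. The Python sketch below checks a candidate value against both rules, assuming the Hadoop 2.x defaults of 1048576 and 512. block_size_problems and nearest_valid are hypothetical helpers, not part of Hadoop.

```python
# Defaults from hdfs-default.xml in Hadoop 2.x (assumed; a cluster may override them).
MIN_BLOCK_SIZE = 1048576   # dfs.namenode.fs-limits.min-block-size
BYTES_PER_CHECKSUM = 512   # dfs.bytes-per-checksum


def block_size_problems(size, min_block=MIN_BLOCK_SIZE, checksum=BYTES_PER_CHECKSUM):
    """Return the reasons a dfs.blocksize value would be rejected (empty if valid)."""
    problems = []
    if size < min_block:
        problems.append(f"{size} < {min_block} (minimum block size)")
    if size % checksum != 0:
        problems.append(f"{size} is not a multiple of {checksum} (bytes per checksum)")
    return problems


def nearest_valid(size, min_block=MIN_BLOCK_SIZE, checksum=BYTES_PER_CHECKSUM):
    """Round size down to a multiple of checksum, but never below the minimum."""
    rounded_down = (size // checksum) * checksum
    # Smallest multiple of checksum that is still >= min_block.
    smallest_allowed = -(-min_block // checksum) * checksum
    return max(rounded_down, smallest_allowed)


for candidate in (30, 2000000, 1048576):
    print(candidate, block_size_problems(candidate) or "OK")
```

For example, nearest_valid(2000000) gives 1999872 (3906 × 512), which passes both checks.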

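4. Now, to verify that the data was actually stored in HDFS, we will use the "ls" command (hadoop fs -ls).

5. Do I really need to specify the block size every time? No, we don't. When -D dfs.blocksize is left out, HDFS uses the configured default block size. Just use the command below:

- Command: hadoop fs -put stocks.csv stocks1.csv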
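Without a -D option, the client takes the block size from its configuration: dfs.blocksize in hdfs-site.xml if it is set there, otherwise the built-in default (134217728 bytes, i.e. 128 MB, in Hadoop 2.x). The Python sketch below is a simplified model of that lookup order. effective_blocksize and parse_size are hypothetical names, the site file content is made up, and only the k/m/g/t size suffixes are handled.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Built-in default of dfs.blocksize in Hadoop 2.x (128 MB).
DEFAULT_BLOCKSIZE = 134217728

# Binary suffixes for values such as "128m" (a subset of what Hadoop accepts).
SUFFIXES = {"k": 1 << 10, "m": 1 << 20, "g": 1 << 30, "t": 1 << 40}


def parse_size(value: str) -> int:
    """Convert "1048576" or "128m" into a byte count."""
    value = value.strip().lower()
    if value and value[-1] in SUFFIXES:
        return int(value[:-1]) * SUFFIXES[value[-1]]
    return int(value)


def effective_blocksize(site_xml: Optional[str], cli_override: Optional[str] = None) -> int:
    """Block size a client would use: -D override, then hdfs-site.xml, then default."""
    if cli_override is not None:
        # e.g. hadoop fs -D dfs.blocksize=1048576 -put ...
        return parse_size(cli_override)
    if site_xml:
        root = ET.fromstring(site_xml)
        for prop in root.iter("property"):
            if (prop.findtext("name") or "").strip() == "dfs.blocksize":
                return parse_size(prop.findtext("value") or "")
    return DEFAULT_BLOCKSIZE


# Hypothetical hdfs-site.xml content.
SITE_XML = """<configuration>
  <property><name>dfs.blocksize</name><value>256m</value></property>
</configuration>"""

print(effective_blocksize(SITE_XML))             # value from the site file
print(effective_blocksize(SITE_XML, "1048576"))  # the -D override wins
print(effective_blocksize(None))                 # built-in default
```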

Step 2. View the Number of Blocks

1. Run the command below to view the number of blocks created for our file stocks.csv.

2. Command: hdfs fsck /user/root/stocks.csv

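3. Notice that the file has 4 blocks with an average block size of 903299 B. This is the total file size divided by the number of blocks, so it is smaller than the block size the file was written with.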

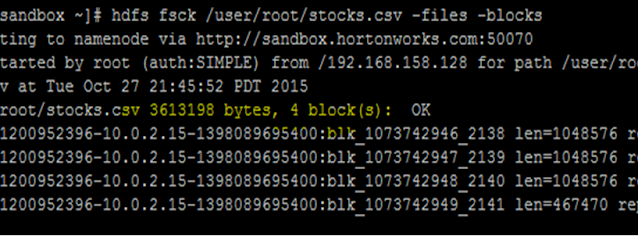
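The block count and the average follow from simple arithmetic. Using the sizes reported for this file in Step 3 (three blocks of 1048576 bytes and one of 467470 bytes), the file is 3613198 bytes long. The Python sketch below splits a file size into block lengths and averages them with integer division, which reproduces the 903299 B shown by fsck; block_lengths is a hypothetical helper.

```python
from typing import List


def block_lengths(file_size: int, block_size: int) -> List[int]:
    """Lengths of the blocks HDFS would create: full blocks plus a shorter last one."""
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


BLOCK_SIZE = 1048576                 # block size stocks.csv was stored with
FILE_SIZE = 3 * BLOCK_SIZE + 467470  # 3613198 bytes, from the sizes in Step 3

blocks = block_lengths(FILE_SIZE, BLOCK_SIZE)
average = FILE_SIZE // len(blocks)   # integer division, matching the fsck summary
print(len(blocks), blocks, average)
# prints: 4 [1048576, 1048576, 1048576, 467470] 903299
```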

Step 3. Find Actual Blocks

1. Enter the fsck command as before, this time with the -files and -blocks options.

2. Command: hdfs fsck /user/root/stocks.csv -files -blocks

3. The output contains the block IDs, which are also the names of the block files on the DataNodes.

(Note: the IP address for your system can be different.)
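Each block entry in the -blocks output names the block pool and the block as blk_<blockId>_<generationStamp>. On the DataNode, the data file is named blk_<blockId> and its checksum file blk_<blockId>_<generationStamp>.meta. The Python sketch below pulls those names out of fsck output with a regular expression. The pattern assumes the Hadoop 2.x output layout, block_files is a hypothetical helper, and the block ID and generation stamp in the sample line are made up.

```python
import re
from typing import Iterator, Tuple

# Block entries look like "<n>. BP-<pool>:blk_<blockId>_<genStamp> len=<bytes> ..."
# (layout assumed from Hadoop 2.x fsck output).
BLOCK_RE = re.compile(r"(BP-[^:\s]+):blk_(-?\d+)_(\d+)\s+len=(\d+)")


def block_files(fsck_output: str) -> Iterator[Tuple[str, str, str, int]]:
    """Yield (block pool, data file, meta file, length) for each block listed."""
    for pool, block_id, gen_stamp, length in BLOCK_RE.findall(fsck_output):
        yield (pool, f"blk_{block_id}", f"blk_{block_id}_{gen_stamp}.meta", int(length))


# Hypothetical line: the pool ID is from this post, the block ID and stamp are made up.
SAMPLE = "0. BP-1200952396-10.0.2.15-1398089695400:blk_1073741825_1001 len=1048576 repl=1"

for entry in block_files(SAMPLE):
    print(entry)
```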

4. Now open FileZilla and browse to this path on the Linux VM: "/hadoop/hdfs/data/current/BP-1200952396-10.0.2.15-1398089695400/current/finalized/subdir54"
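The subdir a block lands in is not something you can read off the fsck output, and the directory layout under finalized differs between Hadoop versions, so instead of guessing the path you can search the DataNode data directory for the block file by name. The Python sketch below does that with os.walk, assuming the data directory is /hadoop/hdfs/data as in the path above; find_block_file is a hypothetical helper.

```python
import os
from typing import Optional


def find_block_file(data_dir: str, block_name: str) -> Optional[str]:
    """Search a DataNode data directory for a block file such as "blk_1073742589"."""
    for root, _dirs, files in os.walk(data_dir):
        if block_name in files:  # exact name match, so .meta files are skipped
            return os.path.join(root, block_name)
    return None  # not stored on this DataNode, or in a directory we cannot read
```

On the sandbox, find_block_file("/hadoop/hdfs/data", "blk_1073742589") should return the full path of that block file, provided the block is stored on this DataNode and the directories are readable by your user.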

5. Now let's look at the content of the blocks using the tail command.

6. Command: tail /hadoop/hdfs/data/current/BP-1200952396-10.0.2.15-1398089695400/current/finalized/subdir54/blk_1073742589
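HDFS cuts a file into blocks at fixed byte offsets, not at line boundaries, so the last line that tail prints from a block file can be an incomplete CSV record whose remainder sits at the start of the next block. The Python sketch below reproduces this on a tiny, made-up CSV with a 32-byte block size, far below what HDFS allows and chosen only to make the effect visible. split_into_blocks and tail_lines are hypothetical helpers.

```python
from typing import List


def split_into_blocks(data: bytes, block_size: int) -> List[bytes]:
    """Cut data at fixed byte offsets, ignoring line boundaries, as HDFS does."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def tail_lines(block: bytes, n: int = 10) -> List[bytes]:
    """Last n lines of one block, roughly what `tail -n` prints for a block file."""
    return block.splitlines()[-n:]


# Tiny made-up CSV; 32 bytes is an illustrative block size only.
DATA = b"date,open,close\n2010-01-04,10.0,10.5\n2010-01-05,10.5,10.2\n"
blocks = split_into_blocks(DATA, 32)

for i, block in enumerate(blocks):
    print(i, tail_lines(block, 1))
# Block 0 ends with the partial record b"2010-01-04,10.0,"; block 1 starts with b"10.5".
```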

7. If you check the path in the FileZilla screenshot, there are 4 blocks. Three of them are the same size, 1048576 bytes, and the 4th is 467470 bytes.

8. Select the sandbox instance and click the Play virtual machine icon in the bottom-right corner.

9. The VM will start, which may take several minutes. Once the VM startup is complete, the console should look like the following
