Use Pig to navigate through HDFS and explore a dataset

Step 1: View the Raw Data on the Linux machine

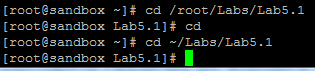

- Change directory to /root/Labs/Lab5.1

- Command: "cd /root/Labs/Lab5.1"

- List the files in the current folder

- Command: "ls"

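- Unzip the archive in the Lab5.1 folder, which contains a file named whitehouse_visits.txt. The archive is assumed here to be named whitehouse_visits.zip; use the name that ls shows in your folder.

- Command: "unzip whitehouse_visits.zip"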

- View the contents of this file

- Command: tail whitehouse_visits.txt

You should now see the last 10 lines of the file, which is what tail prints by default.
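The Step 1 commands can also be collected into one small helper. This is only a sketch: the function name preview_visits is hypothetical, and the archive name whitehouse_visits.zip is an assumption, so pass the real archive name as the second argument if yours differs.

```shell
#!/bin/sh
# Sketch of Step 1 as a single helper (hypothetical name: preview_visits).
# The body runs in a subshell, so the caller's working directory is unchanged.
preview_visits() (
    lab_dir="$1"
    archive="${2:-whitehouse_visits.zip}"   # assumed archive name

    cd "$lab_dir" || exit 1

    # List the files in the lab folder.
    ls

    # Extract only when the text file is missing and the archive is present.
    if [ ! -f whitehouse_visits.txt ] && [ -f "$archive" ]; then
        unzip "$archive" || exit 1
    fi

    # tail prints the last 10 lines by default.
    tail whitehouse_visits.txt
)

# Run against the lab folder only when it exists on this machine.
if [ -d /root/Labs/Lab5.1 ]; then
    preview_visits /root/Labs/Lab5.1
fi
```

Because the unzip step is skipped when whitehouse_visits.txt is already there, the helper can be re-run without being asked to overwrite the extracted file.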

Step 2: Load the Data into HDFS

- Start the Grunt shell

- Command: pig

- Make a new directory named whitehouse in HDFS.

- Command: grunt> mkdir whitehouse

- Use copyFromLocal to copy the whitehouse_visits.txt file into the whitehouse folder in HDFS and rename the file to visits.txt.

- Command: grunt> copyFromLocal /root/Labs/Lab5.1/whitehouse_visits.txt whitehouse/visits.txt

- Verify that the file was uploaded successfully using the ls command.

- Command: grunt> ls whitehouse
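The same three steps can also be run from the Linux shell instead of Grunt, using the Hadoop file system commands. The following is a minimal sketch, assuming the hdfs client is on the PATH; the helper name load_visits_into_hdfs is hypothetical. As in Grunt, the relative path whitehouse resolves under the current user's HDFS home directory.

```shell
#!/bin/sh
# Sketch of Step 2 from the Linux shell (hypothetical helper name).
load_visits_into_hdfs() {
    local_file="$1"

    # Create the target directory; -p avoids an error if it already exists.
    hdfs dfs -mkdir -p whitehouse &&
    # Copy the local file into HDFS under the new name visits.txt.
    hdfs dfs -copyFromLocal "$local_file" whitehouse/visits.txt &&
    # List the directory to confirm the upload.
    hdfs dfs -ls whitehouse
}

# Only attempt the upload when an hdfs client is available.
if command -v hdfs >/dev/null 2>&1; then
    load_visits_into_hdfs /root/Labs/Lab5.1/whitehouse_visits.txt
else
    echo "hdfs client not found; skipping upload" >&2
fi
```

Chaining the commands with && stops the sequence at the first failure, so the listing runs only after a successful copy.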

Step 3: Define a Relation

- You will use TextLoader to load the visits.txt file.

- TextLoader simply creates a tuple for each line of text, with a single chararray field that contains the entire line.

- It lets you load lines of text without worrying about the format or schema yet.

- Define the following LOAD relation:

- Command: grunt> A = LOAD '/user/root/whitehouse/' USING TextLoader();

- Use DESCRIBE to confirm that A does not have a schema.

- Command: grunt> DESCRIBE A;

- To get a sense of what the data looks like, use the LIMIT operator to define a new relation named A_Limit that is limited to 10 records of A.

- Command: grunt> A_Limit = LIMIT A 10;

Step 4: View the Records

- Use the DUMP operator to view the A_Limit relation.

- Command: grunt> DUMP A_Limit;

- This prints 10 arbitrary rows from visits.txt.
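Steps 3 and 4 can also be run non-interactively by saving the statements to a script file and passing it to pig. The sketch below assumes the data sits under /user/root/whitehouse/ in HDFS (the home directory of user root) and that the relation is named A throughout; the script file name explore_visits.pig is a hypothetical choice.

```shell
#!/bin/sh
# Write the Step 3 and Step 4 statements to a Pig script file.
# The file name explore_visits.pig is hypothetical.
script="${TMPDIR:-/tmp}/explore_visits.pig"

cat > "$script" <<'EOF'
-- Each line of every file in the directory becomes a one-field tuple.
A = LOAD '/user/root/whitehouse/' USING TextLoader();
-- Reports that A has no schema, since no AS clause was given.
DESCRIBE A;
-- Keep 10 records; which 10 are returned is not guaranteed.
A_Limit = LIMIT A 10;
-- DUMP triggers execution and prints the tuples to the console.
DUMP A_Limit;
EOF

# Run the script only when pig is installed on this machine.
if command -v pig >/dev/null 2>&1; then
    pig -f "$script"
else
    echo "pig not found; script written to $script" >&2
fi
```

Nothing is read from HDFS until the DUMP statement runs; the LOAD and LIMIT lines only define relations.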
