Auto-scaling in Google Cloud Dataproc

Google Cloud Dataproc's most powerful feature is its ability to auto-scale.

- This allows you to create relatively lightweight clusters and have them automatically scale up to meet the demands of a job, then scale back down when the job is complete.

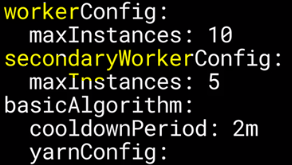

- Auto-scaling policies are written in YAML. They set the minimum and maximum number of primary workers, as well as of secondary (typically preemptible) workers.

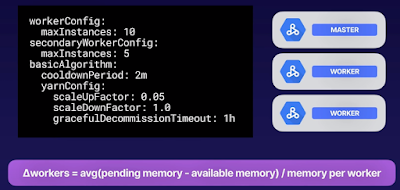
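To make the shape of a policy concrete, here is a minimal sketch that builds one as a Python dictionary and renders it as YAML. The field names follow the Dataproc autoscaling policy format as far as I know it; every value is an illustrative assumption rather than a recommendation, and `to_yaml` is a hypothetical helper written only for this example (it handles nested dictionaries of plain values, nothing more).

```python
def to_yaml(node, indent=0):
    """Render a nested dict of scalars as block-style YAML (no lists, no quoting)."""
    lines = []
    pad = " " * indent
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 2))
        else:
            lines.append(f"{pad}{key}: {value}")
    return "\n".join(lines)


# Illustrative values only; tune them for your own workload.
policy = {
    "workerConfig": {"minInstances": 2, "maxInstances": 10},
    "secondaryWorkerConfig": {"minInstances": 0, "maxInstances": 50},
    "basicAlgorithm": {
        "cooldownPeriod": "4m",
        "yarnConfig": {
            "scaleUpFactor": 0.05,
            "scaleDownFactor": 1.0,
            "gracefulDecommissionTimeout": "1h",
        },
    },
}

policy_yaml = to_yaml(policy)
print(policy_yaml)
```

The printed text can be saved to a file and, assuming the gcloud CLI is set up, registered with `gcloud dataproc autoscaling-policies import`.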

- The basic auto-scaling algorithm uses YARN memory metrics to determine when to adjust the size of the cluster. After each cooldown period elapses, the optimal number of workers is re-evaluated using a formula based on pending and available YARN memory.

- If the cluster has a high level of pending memory, it probably has more work to do to finish its tasks, so more workers may be needed. If it has a high level of available memory, some workers are probably no longer required and the cluster can be scaled down again.

- The scale-up and scale-down factors are used to calculate how many workers to add or remove if the number needs to change.

- The graceful decommission timeout is specific to YARN. It sets a time limit for removing a node that is still processing a task. This is a kind of failsafe, so busy workers are not removed abruptly.
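The calculation described above can be sketched in a few lines of Python. This is a simplified illustration, not the service's actual implementation: the function name `worker_delta`, its parameters, and the rounding choices are all assumptions made for the example.

```python
import math


def worker_delta(pending_mb, available_mb, mb_per_worker,
                 scale_up_factor, scale_down_factor):
    """Return how many workers to add (positive) or remove (negative)."""
    # Estimated change: net memory shortfall (or surplus) expressed in workers.
    estimated = (pending_mb - available_mb) / mb_per_worker
    if estimated > 0:
        # Add a fraction of the shortfall; rounding up is an assumption.
        return math.ceil(estimated * scale_up_factor)
    if estimated < 0:
        # Remove a fraction of the surplus; rounding toward zero is an assumption.
        return -math.floor(-estimated * scale_down_factor)
    return 0


# 24 GB pending, 8 GB per worker: shortfall of 3 workers, half of it added now.
print(worker_delta(24576, 0, 8192, 0.5, 1.0))   # prints 2
# 32 GB available, nothing pending: surplus of 4 workers, all of it removed.
print(worker_delta(0, 32768, 8192, 0.5, 1.0))   # prints -4
```

A small scale-up factor makes the cluster grow cautiously over several cooldown periods, while a scale-down factor of 1.0 releases all surplus workers in one step.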

When to Avoid Auto-scaling in Dataproc

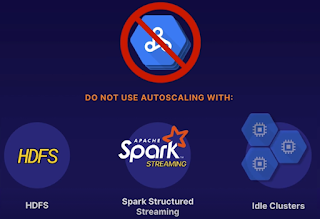

- There are some situations in which you should not use Dataproc auto-scaling.

- Ideally, you shouldn't use HDFS storage with auto-scaling. Use Cloud Storage instead.

- If you are using auto-scaling and HDFS, you need to make sure that you have enough primary workers to store all of your HDFS data, so it doesn't become corrupted by a scale-down operation.

- Spark Structured Streaming is also not supported by auto-scaling.

- You shouldn't rely on auto-scaling to maintain idle clusters. If your cluster is likely to be idle for periods of time, don't rely on auto-scaling to clean up resources for you.

- Just delete the idle cluster and create a new one the next time you need to run a job.
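For the HDFS case mentioned above, a rough back-of-the-envelope check can estimate how many primary workers are needed to hold the data. This is only a sketch: the function name, the replication factor, and the per-worker disk figure are assumptions for illustration, and real sizing should leave headroom for temporary and shuffle data.

```python
import math


def min_primary_workers(hdfs_data_gb, replication, usable_gb_per_worker):
    """Estimate the fewest primary workers that can hold all HDFS replicas."""
    # Every block is stored `replication` times across the primary workers.
    raw_gb = hdfs_data_gb * replication
    return math.ceil(raw_gb / usable_gb_per_worker)


# 2 TB of data, 2 replicas, 500 GB usable disk per primary worker.
print(min_primary_workers(2000, 2, 500))  # prints 8
```

The result is a sensible lower bound for the minimum number of primary workers in the policy, so that a scale-down never removes nodes that hold HDFS data.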
