Hadoop Ecosystem: Hadoop Tools for Crunching Big Data

We live in an age where data is generated at a rapid and largely uncontrolled rate, with volumes growing day after day. This is what we call BIG DATA.

Big Data is not just structured information stored neatly in rows and columns; much of it comes in complex, unstructured formats. To extract valuable information from it, an organization needs technologies that enable scalable, accurate, and powerful analysis. Google, one of the pioneers in this field, designed scalable systems such as MapReduce and the Google File System (GFS) and published papers describing them. Later, an open source implementation of these ideas was started under the name Hadoop and became an Apache project. Apache Hadoop is a framework that allows for the distributed processing of large data sets across clusters of machines.

Apache™ Hadoop® is an open source, Java-based framework designed to process huge amounts of data in a distributed computing environment. Doug Cutting and Mike Cafarella created Hadoop; it grew out of their Apache Nutch web crawler project around 2005 and had its first release as a separate Apache project in 2006.

The Hadoop Ecosystem is neither a programming language nor a service; it is a platform, or framework, for solving big data problems. You can think of it as a suite that encompasses a number of services for ingesting, storing, analyzing, and maintaining data.

Below are the Hadoop components that together form a Hadoop ecosystem:

- HDFS -> Hadoop Distributed File System

- YARN -> Yet Another Resource Negotiator

- MapReduce -> Data processing using programming

- Spark -> In-memory Data Processing

- PIG, HIVE -> Data Processing Services using Query (SQL-like)

- HBase -> NoSQL Database

- Mahout, Spark MLlib -> Machine Learning

- Apache Drill -> SQL on Hadoop

- Zookeeper -> Managing Cluster

- Oozie -> Job Scheduling

- Flume, Sqoop -> Data Ingestion Services

- Solr & Lucene -> Searching & Indexing

- Ambari -> Provision, Monitor and Maintain cluster

Hadoop Ecosystem Components Diagram

The Hadoop framework includes the following core components:

DISTRIBUTED STORAGE

Several different pieces come together to enable distributed storage in Hadoop.

- HADOOP DISTRIBUTED FILE SYSTEM

In Hadoop, distributed storage is provided by the Hadoop Distributed File System (HDFS). HDFS provides redundant storage by replicating each block of data on several machines (three copies by default) and has the following characteristics:

- It's designed to reliably store data on commodity hardware.

- It's built to expect hardware failures.

- It is intended for large files and batch inserts. (Write once, read many times.)

There are two major components of Hadoop HDFS: the NameNode and the DataNode.

i. NameNode

The NameNode is also known as the master node. It does not store the actual data. Instead, it stores metadata: the file system namespace (files and directories), the blocks that make up each file, and which DataNodes, on which racks, hold each block. The NameNode keeps block locations in memory and learns them from the block reports that DataNodes send.
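To make the NameNode's role concrete, here is a minimal in-memory sketch of the kind of metadata it keeps: a namespace mapping each file path to its blocks, and each block to the DataNodes holding a replica. It assumes HDFS's default 128 MB block size and replication factor of 3. The `ToyNameNode` class and its methods are hypothetical, and the round-robin placement is a simplification, not HDFS's rack-aware placement policy.

```python
import math
from dataclasses import dataclass, field

BLOCK_SIZE = 128 * 1024 * 1024  # default HDFS block size (dfs.blocksize) since Hadoop 2
REPLICATION = 3                 # default HDFS replication factor (dfs.replication)


@dataclass
class FileMetadata:
    path: str
    size: int
    # block id -> names of the DataNodes that hold a replica of that block
    blocks: dict = field(default_factory=dict)


class ToyNameNode:
    """Hypothetical in-memory model of NameNode metadata (no file data is stored).

    Replica placement is simple round-robin, NOT HDFS's rack-aware policy.
    """

    def __init__(self, datanodes):
        self.datanodes = list(datanodes)
        self.namespace = {}  # file path -> FileMetadata
        self._next_block_id = 0
        self._next_node = 0

    def add_file(self, path, size):
        meta = FileMetadata(path, size)
        num_blocks = math.ceil(size / BLOCK_SIZE)  # an empty file has no blocks
        copies = min(REPLICATION, len(self.datanodes))
        for _ in range(num_blocks):
            # consecutive round-robin picks are distinct while copies <= len(datanodes)
            replicas = [
                self.datanodes[(self._next_node + i) % len(self.datanodes)]
                for i in range(copies)
            ]
            self._next_node += copies
            meta.blocks[self._next_block_id] = replicas
            self._next_block_id += 1
        self.namespace[path] = meta
        return meta

    def locate(self, path):
        """Answer a client's question: which DataNodes hold each block of this file?"""
        return self.namespace[path].blocks
```

A 300 MB file, for example, is split into three blocks; the last block holds only the remaining 44 MB, since HDFS does not pad a file's final block to the full block size.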

ii. DataNode

The DataNode is also known as the slave node. DataNodes store the actual data in HDFS and perform read and write operations at the request of clients. Each block replica on a DataNode is represented by two files on the local file system: the first holds the data itself, and the second records the block's metadata, which includes checksums of the data. At startup, each DataNode connects to its NameNode and performs a handshake, during which the namespace ID and the DataNode's software version are verified. If a mismatch is found, the DataNode shuts down automatically.
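The checksum metadata a DataNode keeps for each block replica, and the startup check described above, can be sketched as follows. The sketch assumes HDFS's default of one checksum per 512 bytes of data; it uses Python's `zlib.crc32` (CRC-32) as a stand-in for CRC32C, the algorithm HDFS uses by default. The helper names are hypothetical, and `handshake_ok` is a simplified exact-match check rather than Hadoop's actual version-compatibility logic.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # HDFS default for dfs.bytes-per-checksum


def compute_checksums(block_data: bytes) -> list:
    """One checksum per 512-byte chunk, like the entries in a block's metadata file.

    zlib.crc32 (CRC-32) stands in for CRC32C, the HDFS default algorithm.
    """
    return [
        zlib.crc32(block_data[i:i + BYTES_PER_CHECKSUM])
        for i in range(0, len(block_data), BYTES_PER_CHECKSUM)
    ]


def find_corrupt_chunks(block_data: bytes, stored: list) -> list:
    """Return indices of chunks whose recomputed checksum differs from the stored one."""
    current = compute_checksums(block_data)
    if len(current) != len(stored):
        raise ValueError("block length does not match its metadata")
    return [i for i, (now, before) in enumerate(zip(current, stored)) if now != before]


def handshake_ok(dn_namespace_id, dn_version, nn_namespace_id, nn_version):
    """Simplified startup check: namespace ID and software version must both match,
    otherwise the DataNode should shut down."""
    return dn_namespace_id == nn_namespace_id and dn_version == nn_version
```

Checksumming per chunk rather than per block means a reader can verify, and pinpoint corruption in, just the part of a block it actually reads.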

- HBASE

HBase is a distributed, column-oriented NoSQL database. HBase uses HDFS for its underlying storage and supports both batch-style computations using MapReduce and point queries (random reads).

HBase also performs the following tasks:

- Stores large data volumes (up to billions of rows) atop clusters of commodity hardware.

- Bulk stores logs, documents, real-time activity feeds, and raw imported data.

- Provides strongly consistent reads and writes for data that Hadoop applications use.

- Enables the data store to be aggregated or processed using MapReduce functionality.

- Offers a data platform for analytics and machine learning.
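HBase's data model can be pictured as a sorted map from row key to columns (written as `family:qualifier`), where each cell keeps several timestamped versions. The following toy, in-memory sketch of that model shows a point query (`get`) and a row-range scan. The class `ToyHTable` and its methods are hypothetical and are not the HBase client API; real HBase persists this data in HDFS.

```python
import time
from collections import defaultdict


class ToyHTable:
    """Hypothetical in-memory model of HBase's data model:
    row key -> "family:qualifier" -> {timestamp: value}."""

    def __init__(self, max_versions=3):
        self.max_versions = max_versions
        self.rows = defaultdict(lambda: defaultdict(dict))

    def put(self, row, column, value, ts=None):
        ts = time.time_ns() if ts is None else ts
        versions = self.rows[row][column]
        versions[ts] = value
        # keep only the newest max_versions versions of this cell
        for old in sorted(versions)[:-self.max_versions]:
            del versions[old]

    def get(self, row, column):
        """Point query (random read): newest value of one cell, or None."""
        versions = self.rows.get(row, {}).get(column)
        if not versions:
            return None
        return versions[max(versions)]

    def scan(self, start, stop):
        """Scan rows in sorted key order; start inclusive, stop exclusive."""
        for row in sorted(self.rows):
            if start <= row < stop:
                yield row, {col: v[max(v)] for col, v in self.rows[row].items()}
```

Because rows are kept in key order, choosing a good row key (for example `user#001`) determines which range scans are cheap.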

- HCATALOG

HCatalog is a table and storage management layer for Hadoop that enables Hadoop applications such as Pig™, MapReduce, and Hive™ to read and write data in a tabular format rather than as raw files.

It also offers the following features:

- A centralized location for storing data that Hadoop applications use.

- A reusable data store for sequenced and iterated Hadoop processes.

- Data storage in a relational abstraction.

- Metadata management.
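The idea of a shared table layer over files can be sketched with a small registry that stores each table's schema and location once, so every application parses the same files the same way. Everything here (`TableDef`, `ToyCatalog`, the `/warehouse/page_views` path) is hypothetical and only illustrates the concept; it is not the HCatalog API.

```python
from dataclasses import dataclass


@dataclass
class TableDef:
    name: str
    location: str   # where the table's files live, e.g. an HDFS directory
    columns: list   # list of (column_name, python_type) pairs
    delimiter: str = ","


class ToyCatalog:
    """Hypothetical shared metadata registry: applications look up a table by name
    and receive typed rows instead of parsing raw files themselves."""

    def __init__(self):
        self._tables = {}

    def register(self, table: TableDef):
        self._tables[table.name] = table

    def read_rows(self, name, lines):
        """Turn raw delimited lines from the table's files into typed records."""
        t = self._tables[name]
        for line in lines:
            fields = line.rstrip("\n").split(t.delimiter)
            yield {col: typ(raw) for (col, typ), raw in zip(t.columns, fields)}
```

Whether the reader is a Pig script, a MapReduce job, or a Hive query, it sees the same column names and types.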

DISTRIBUTED PROCESSING

Hadoop relies on MapReduce and Yet Another Resource Negotiator (YARN) to enable distributed processing.

- MAPREDUCE

MapReduce is a distributed data processing model and execution environment that runs on large clusters of commodity machines. It lets you derive insights from the data you've stored. A MapReduce job breaks its work into two kinds of functions: a Map function, which transforms each input record into intermediate key-value pairs, and a Reduce function, which aggregates all the values that share the same key.

MapReduce offers the following advantages:

- Aggregation (counting, sorting, and filtering) on large and disparate data sets.

- Scalable parallelism of Map or Reduce tasks.

- Distributed task execution.
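The Map and Reduce steps can be illustrated with the classic word-count example. This is a single-process Python sketch of the programming model, not Hadoop's Java API: in a real cluster, map tasks run in parallel on separate input splits and the framework shuffles intermediate pairs across the network to the reducers.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit (word, 1) for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle and sort: group all intermediate values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key, values):
    """Reduce: aggregate all values that share a key."""
    return key, sum(values)


def word_count(lines):
    intermediate = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, vs) for k, vs in shuffle(intermediate).items())
```

For instance, `word_count(["the cat sat", "The dog sat"])` groups both occurrences of "sat" and of "the" under one key each before the reduce step sums them.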

Hadoop Ecosystem Overview – Hadoop MapReduce

- YARN

YARN is the cluster and resource management layer for the Apache Hadoop ecosystem. It was introduced in Hadoop 2 and is one of the main features of that second generation of the framework.

YARN offers the following functionality:

- It schedules applications, deciding which of them get cluster resources and in what order.

- As one part of a larger architecture, YARN lets different processing engines, such as MapReduce and Spark, run on the same cluster and share the data stored there.

- It allocates resources (memory and CPU, handed out as containers) to particular applications and monitors their resource usage.
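Resource allocation in YARN revolves around containers, each a bundle of memory and CPU cores granted to an application on a particular node. The sketch below is a deliberately simple first-fit allocator. The `Node`, `ContainerRequest`, and `allocate` names are hypothetical, and YARN's real schedulers (such as the Capacity and Fair schedulers) add queues, priorities, and data locality that are omitted here.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_mb: int
    free_vcores: int


@dataclass
class ContainerRequest:
    app_id: str
    memory_mb: int
    vcores: int


def allocate(nodes, requests):
    """Toy first-fit allocator: place each container request on the first node
    with enough free memory and vcores. Returns (allocations, pending), where
    allocations is a list of (app_id, node_name) pairs."""
    allocations, pending = [], []
    for req in requests:
        for node in nodes:
            if node.free_mb >= req.memory_mb and node.free_vcores >= req.vcores:
                node.free_mb -= req.memory_mb
                node.free_vcores -= req.vcores
                allocations.append((req.app_id, node.name))
                break
        else:
            # no node had room; the request waits for resources to free up
            pending.append(req)
    return allocations, pending
```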

Apache Hadoop Ecosystem – Hadoop Yarn Diagram

WORKFLOW MONITORING AND SCHEDULING

- ZOOKEEPER

Apache Zookeeper is a centralized service and a Hadoop Ecosystem component for maintaining configuration information, naming, providing distributed synchronization, and providing group services. Zookeeper manages and coordinates a large cluster of machines.

Features of Zookeeper:

- Fast – Zookeeper is fast with workloads where reads are more common than writes; it performs best at read/write ratios of around 10:1.

- Ordered – Zookeeper stamps each update with a number that reflects the order of all Zookeeper transactions.
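Two Zookeeper ideas that enable coordination can be sketched together: every write receives an increasing transaction id (the zxid), and sequential znodes get a zero-padded counter appended to their names, which common recipes use for leader election and locks. `ToyZooKeeper` and `elect_leader` are hypothetical single-process illustrations, not a Zookeeper client; a real election would use ephemeral sequential znodes so that a crashed leader's node disappears.

```python
import itertools


class ToyZooKeeper:
    """Hypothetical single-process model: writes get increasing zxids, and
    sequential znodes get a per-parent 10-digit counter suffix."""

    def __init__(self):
        self._zxid = itertools.count(1)
        self._seq = {}     # parent path -> next sequence number
        self.znodes = {}   # path -> (data, zxid)

    def create(self, path, data=b"", sequential=False):
        if sequential:
            parent = path.rsplit("/", 1)[0]
            n = self._seq.get(parent, 0)
            self._seq[parent] = n + 1
            path = f"{path}{n:010d}"
        if path in self.znodes:
            raise KeyError(f"node exists: {path}")
        self.znodes[path] = (data, next(self._zxid))
        return path

    def children(self, parent):
        """Direct children of a path, sorted by name."""
        prefix = parent.rstrip("/") + "/"
        return sorted(p[len(prefix):] for p in self.znodes
                      if p.startswith(prefix) and "/" not in p[len(prefix):])


def elect_leader(zk, candidates):
    """Election recipe sketch: each candidate creates a sequential znode;
    the candidate owning the lowest sequence number becomes leader."""
    owner = {}
    for name in candidates:
        owner[zk.create("/election/n_", name.encode(), sequential=True)] = name
    lowest = zk.children("/election")[0]
    return owner["/election/" + lowest]
```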

- OOZIE

Oozie is a workflow scheduler system for managing Hadoop jobs. It runs workflows made up of dependent jobs, letting users define directed acyclic graphs (DAGs) of actions that run sequentially or in parallel in Hadoop.

Oozie is very flexible. You can easily start, stop, suspend, and re-run jobs. Oozie also makes it very easy to re-run failed workflows.

Oozie is scalable and can manage timely execution of thousands of workflows (each consisting of dozens of jobs) in a Hadoop cluster.
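Running a workflow DAG boils down to executing each action only after all the actions it depends on have finished. This sketch uses Python's standard `graphlib.TopologicalSorter` to show that ordering. The action names and the `run_workflow` helper are hypothetical, and real Oozie workflows are defined in XML rather than in Python.

```python
from graphlib import TopologicalSorter


def run_workflow(dependencies, run_action):
    """Execute a DAG of actions in dependency order.

    dependencies maps each action to the set of actions it waits for.
    Actions that become ready together form one wave that could run in
    parallel; waves run one after another.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        for action in ready:
            run_action(action)
            sorter.done(action)
        waves.append(ready)
    return waves
```

With `{"import_orders": set(), "import_users": set(), "join": {"import_orders", "import_users"}, "report": {"join"}}`, the two imports have no dependencies and form one wave that could run in parallel, while `join` and `report` follow in sequence.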

SCRIPTING

- PIG

Developers can use Apache Pig for scripting in Hadoop. Pig provides a high-level data flow language, Pig Latin, together with an execution environment for building complex MapReduce transformations. Scripts written in Pig Latin are translated into executable MapReduce jobs. Pig also lets users create user-defined functions (UDFs) in Java.

Pig also offers the following:

- A scripting environment for executing Extract-Transform-Load (ETL) tasks and procedures on raw data in HDFS.

- A language with SQL-like operators (such as FILTER, GROUP, and JOIN) for creating and running complex MapReduce jobs.

- Data processing, stitching, and schematizing on large and disparate data sets.

- A high-level data flow language.

- A layer of abstraction that enables you to focus on data processing.
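A typical Pig Latin data flow loads records, filters them, groups them, and aggregates each group. The sketch below shows, in plain Python, roughly what such a script computes. The Pig Latin in the comment, the comma-separated input format, and the field names are illustrative assumptions.

```python
from collections import defaultdict

# Roughly the Python equivalent of this Pig Latin script (hypothetical input and fields):
#   logs    = LOAD 'access_log' USING PigStorage(',') AS (user:chararray, status:int);
#   errors  = FILTER logs BY status >= 500;
#   by_user = GROUP errors BY user;
#   counts  = FOREACH by_user GENERATE group AS user, COUNT(errors) AS n;


def load(lines):
    """LOAD: parse comma-separated lines into records with a simple schema."""
    for line in lines:
        user, status = line.strip().split(",")
        yield {"user": user, "status": int(status)}


def count_server_errors(lines):
    errors = (r for r in load(lines) if r["status"] >= 500)    # FILTER
    by_user = defaultdict(list)                                 # GROUP
    for r in errors:
        by_user[r["user"]].append(r)
    return {user: len(rows) for user, rows in by_user.items()}  # FOREACH ... COUNT
```

In Pig itself, each of these steps becomes part of the generated MapReduce plan instead of running in a single process.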

QUERYING

- HIVE

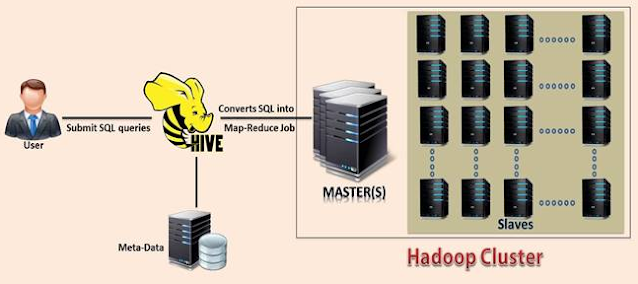

Apache Hive, a Hadoop ecosystem component, is an open source data warehouse system for querying and analyzing large datasets stored in Hadoop files. Hive performs three main functions: data summarization, query, and analysis.

Hive uses a language called HiveQL (HQL), which is similar to SQL. Hive automatically translates HiveQL queries into MapReduce jobs that execute on Hadoop.

Components of Hadoop Ecosystem – Hive Diagram

The main parts of Hive are:

- Metastore – Stores the metadata, such as table schemas and the locations of their data.

- Driver – Manages the lifecycle of a HiveQL statement.

- Query compiler – Compiles HiveQL into a directed acyclic graph (DAG) of stages.

- Hive server – Provides a Thrift interface and a JDBC/ODBC server.
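To illustrate how a HiveQL aggregation can map onto MapReduce, here is a simplified Python emulation of a `GROUP BY` query. The `employees` table, its columns, and the helper functions are hypothetical, and Hive's actual plan may differ, for example by pre-aggregating partial results on the map side.

```python
from collections import defaultdict

# HiveQL being emulated (hypothetical table and columns):
#   SELECT dept, AVG(salary) FROM employees GROUP BY dept;


def map_employee(row):
    """Map side: emit the GROUP BY column as the key and the aggregated column as the value."""
    dept, _name, salary = row
    yield dept, salary


def reduce_avg(dept, salaries):
    """Reduce side: compute AVG over all values for one key."""
    return dept, sum(salaries) / len(salaries)


def run_group_by_avg(rows):
    groups = defaultdict(list)
    for row in rows:
        for key, value in map_employee(row):
            groups[key].append(value)  # shuffle: group values by key
    return dict(reduce_avg(k, v) for k, v in sorted(groups.items()))
```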
