GCP AI Platform Model Deployment on Distributed Machine Instances: Hands-On Lab

Objective:

We will go over the process of training a pre-packaged machine learning model on AI Platform, deploying the trained model, and running predictions against it using distributed machines.

Actions:

- We will focus on the AI Platform aspects, not on TensorFlow

- We will run many gcloud commands on the command line using Cloud Shell

Step Summary:

- Submit a training job locally using the gcloud ai-platform commands

- Submit training job on AI Platform, both single and distributed

- Deploy trained model, and submit predictions

Machine type:

- STANDARD_1

- 1 master

- 4 workers

- 3 parameter servers

- Trained on 4 worker machines with 3 parameter servers

If you want a set of scripts to automate much of the process, the scripts and a PDF file of the commands we used can be found at the following public link:

If you want to download all scripts and files in a terminal environment, use the below command:

- gsutil cp gs://acg-gcp-course-exercise-scripts/data-engineer/ai-platform/\* .

(The period at the end is required to copy to your current location).

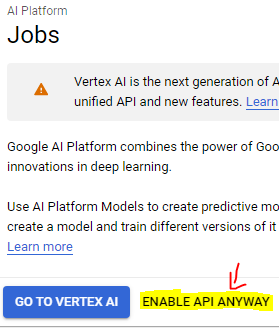

Step 1: Enable the Google Cloud API:

- Go to the web console --> Go to Menu --> Search for AI Platform in Products --> Click on Jobs --> Enable API

- It will take a few minutes to enable; you can refresh the page to check

Step 2: Prepare environment

- Go to Cloud Shell and execute the commands

- ### Set region environment variable (I will run in the us-central1 region)

- REGION=us-central1

- ### Download and unzip ML github data for demo

- wget https://github.com/GoogleCloudPlatform/cloudml-samples/archive/master.zip

- unzip master.zip
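
Later steps rely on REGION and on DEVSHELL_PROJECT_ID, which Cloud Shell normally sets for you. As a minimal sketch, a guard like the one below can catch a missing variable before a command fails halfway through. The helper name `require_vars` is hypothetical and not part of the lab scripts.

```shell
# Hypothetical helper (not part of the lab scripts): fail early if any
# of the named environment variables is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup that works in POSIX sh as well as bash.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_vars REGION DEVSHELL_PROJECT_ID || echo "Set the variables first"` can be run at the start of each new Cloud Shell session, since shell variables do not survive a session restart.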

Step 3: Setup Directory

- Check the files

- ls

- ### Navigate to the cloudml-samples-master > census > estimator directory.

- cd ~/cloudml-samples-master/census/estimator

- ### **Develop and validate trainer on local machine**

- ### Get training data from public GCS bucket

- mkdir data

- gsutil -m cp gs://cloud-samples-data/ml-engine/census/data/* data/

- ### Set path variables for local file paths. These will change later when we use AI Platform

- TRAIN_DATA=$(pwd)/data/adult.data.csv

- EVAL_DATA=$(pwd)/data/adult.test.csv
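
Before training, it may help to confirm that both CSV files were actually copied. The following is a sketch only; `check_data_files` is a hypothetical helper name, and it simply tests that each path exists and is non-empty.

```shell
# Hypothetical helper: confirm each local data file exists and is non-empty.
check_data_files() {
  status=0
  for path in "$@"; do
    if [ -s "$path" ]; then
      # wc -l reports the number of lines (rows) in the file.
      echo "OK      $path ($(wc -l < "$path") lines)"
    else
      echo "MISSING $path" >&2
      status=1
    fi
  done
  return "$status"
}
```

Usage with the variables set above: `check_data_files "$TRAIN_DATA" "$EVAL_DATA"`. This only applies to local paths; once the variables point at gs:// locations, the check no longer works.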

Step 4: Install the required TensorFlow version

- ### Install the sample's requirements.txt to ensure we're using the same version of TF as the sample

- sudo pip install -r ~/cloudml-samples-master/census/requirements.txt

Step 5: Specify the output directory and clear it

- ### Specify output directory, set as variable

- MODEL_DIR=output

- ### Best practice is to delete contents of output directory in case

- ### data remains from previous training run

- rm -rf $MODEL_DIR/*
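
One caveat with the cleanup command above: if MODEL_DIR is ever unset or empty, the path expands to `/*`. A slightly more defensive variant is sketched below on a throwaway temporary directory (an assumption made so the example is safe to run anywhere; the lab itself uses MODEL_DIR=output).

```shell
# Demonstrated on a throwaway directory so it is safe to run anywhere.
MODEL_DIR="$(mktemp -d)/output"
mkdir -p "$MODEL_DIR"
touch "$MODEL_DIR/stale-checkpoint"

# ${MODEL_DIR:?} aborts the command if MODEL_DIR is unset or empty,
# so the glob can never expand to /* by accident.
rm -rf "${MODEL_DIR:?}"/*

# Prints nothing because the directory is now empty.
ls -A "$MODEL_DIR"
```

The quoting also keeps the command working if the directory name contains spaces.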

Step 6: Run a local trainer

- Run the trainer in a local environment using AI Platform

- It takes the ML model from the GitHub repository and trains it using our CSV data

- It runs 1000 training steps and 100 evaluation steps to check accuracy

- ### Run local training using gcloud

- The training process runs in the local session

- gcloud ai-platform local train --module-name trainer.task --package-path trainer/ --job-dir $MODEL_DIR -- --train-files $TRAIN_DATA --eval-files $EVAL_DATA --train-steps 1000 --eval-steps 100

- You can view the results visually in the TensorBoard dashboard

- tensorboard --logdir=$MODEL_DIR --port=8080

- The output includes a URL that you can open directly in Chrome. It is a local viewer for accuracy, loss, and training progress

You might hit errors caused by code or version mismatches; resolve them in your environment before continuing.

Step 7: Execute trainer on distributed instances

- Run the trainer on GCP AI Platform (the job below uses the distributed STANDARD_1 scale tier)

- ### Create regional Cloud Storage bucket used for all output and staging

- gsutil mb -l $REGION gs://$DEVSHELL_PROJECT_ID-aip-demo

- ### Upload training and test/eval data to bucket

- cd ~/cloudml-samples-master/census/estimator

- gsutil cp -r data gs://$DEVSHELL_PROJECT_ID-aip-demo/data

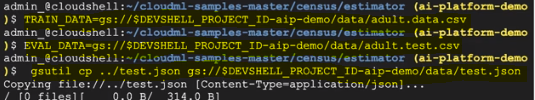

- ### Set data variables to point to storage bucket files

- TRAIN_DATA=gs://$DEVSHELL_PROJECT_ID-aip-demo/data/adult.data.csv

- EVAL_DATA=gs://$DEVSHELL_PROJECT_ID-aip-demo/data/adult.test.csv

- ### Copy test.json to storage bucket

- It will be used later for predictions

- gsutil cp ../test.json gs://$DEVSHELL_PROJECT_ID-aip-demo/data/test.json

- ### Set TEST\_JSON to point to the same storage bucket file

- TEST_JSON=gs://$DEVSHELL_PROJECT_ID-aip-demo/data/test.json
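
If you repeat this step, `gsutil mb` reports an error because the bucket already exists. A minimal sketch of an idempotent variant follows; `ensure_bucket` is a hypothetical helper name, and it assumes that a failing `gsutil ls -b` means the bucket is absent (it can also fail for permission reasons, in which case the create call fails as well).

```shell
# Hypothetical helper: create the bucket only if it is not already there.
ensure_bucket() {
  bucket="$1"
  region="$2"
  # `gsutil ls -b` lists the bucket itself and fails if it cannot be found.
  if gsutil ls -b "$bucket" >/dev/null 2>&1; then
    echo "Bucket already exists: $bucket"
  else
    gsutil mb -l "$region" "$bucket"
  fi
}
```

Usage in this lab would look like `ensure_bucket "gs://$DEVSHELL_PROJECT_ID-aip-demo" "$REGION"`.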

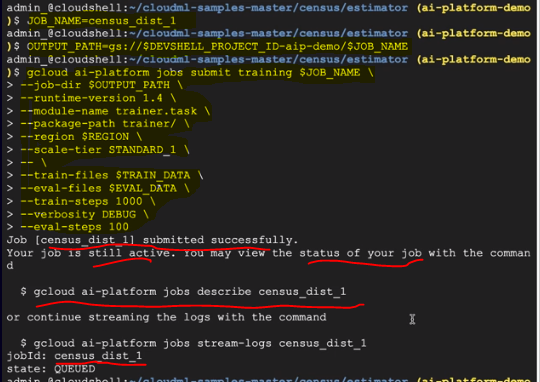

Step 8: Setup Jobs

- ### Set variables for job name and output path

- JOB_NAME=census_dist_1

- OUTPUT_PATH=gs://$DEVSHELL_PROJECT_ID-aip-demo/$JOB_NAME

- ### Submit a distributed training job to AI Platform (STANDARD_1 scale tier)

- ### Job name is JOB\_NAME (census\_dist\_1)

- ### Output path is our Cloud storage bucket/job\_name

- ### Training and evaluation/test data is in our Cloud Storage bucket

gcloud ai-platform jobs submit training $JOB_NAME \

--job-dir $OUTPUT_PATH \

--runtime-version 1.15 \

--module-name trainer.task \

--package-path trainer/ \

--region $REGION \

--scale-tier STANDARD_1 \

-- \

--train-files $TRAIN_DATA \

--eval-files $EVAL_DATA \

--train-steps 1000 \

--verbosity DEBUG \

--eval-steps 100
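
Job names must be unique within a project, so resubmitting with JOB_NAME=census_dist_1 is rejected once that name has been used. One possible approach, sketched here with a hypothetical helper `unique_job_name`, is to append a UTC timestamp to a fixed prefix.

```shell
# Hypothetical helper: append a UTC timestamp so each submission gets a fresh name.
unique_job_name() {
  prefix="$1"
  printf '%s_%s\n' "$prefix" "$(date -u +%Y%m%d_%H%M%S)"
}

JOB_NAME="$(unique_job_name census_dist)"
echo "$JOB_NAME"
```

If you adopt this, remember to derive OUTPUT_PATH from the new JOB_NAME again, and to use the same name later when you look up the exported model.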

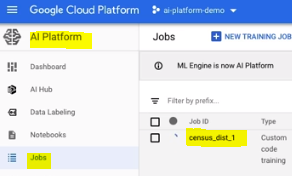

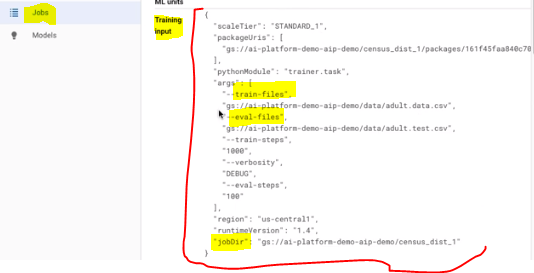

Step 8 (continued): View the job in AI Platform

- ### You can view streaming logs/output with gcloud ai-platform jobs stream-logs $JOB\_NAME

- ### When the job completes, inspect the output path with gsutil ls -r $OUTPUT\_PATH

We can verify the training input in the AI Platform console and check the output files.
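
As an alternative to watching the streamed logs, a script can poll the job state until it finishes. The sketch below assumes `gcloud ai-platform jobs describe` with `--format='value(state)'` returns a single state word such as RUNNING or SUCCEEDED; `wait_for_job` is a hypothetical helper name.

```shell
# Hypothetical helper: poll the job state until it reaches a terminal state.
wait_for_job() {
  job="$1"
  interval="${2:-30}"   # seconds between polls
  while :; do
    state="$(gcloud ai-platform jobs describe "$job" --format='value(state)')"
    echo "$job: ${state:-unknown}"
    case "$state" in
      SUCCEEDED) return 0 ;;
      FAILED|CANCELLED) return 1 ;;
      "") return 2 ;;   # describe returned nothing; stop instead of looping forever
    esac
    sleep "$interval"
  done
}
```

A typical call would be `wait_for_job "$JOB_NAME" && gsutil ls -r "$OUTPUT_PATH"`, which lists the output only after the job has succeeded.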

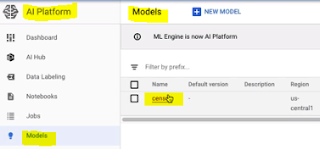

Step 9: Prediction Phase

- ### Deploy a model for prediction, setting variables in the process

- cd ~/cloudml-samples-master/census/estimator

- MODEL_NAME=census

- ### Create the ML Engine model

- gcloud ai-platform models create $MODEL_NAME --regions=$REGION

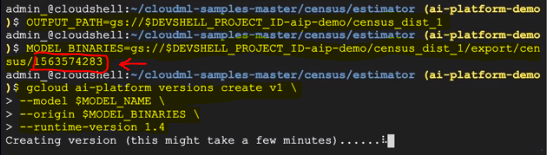

Step 10: Set the job output we want to use and create model version

- ### This example uses census\_dist\_1

- OUTPUT_PATH=gs://$DEVSHELL_PROJECT_ID-aip-demo/census_dist_1

- ##### CHANGE census\_dist\_1 to use the output of a different job

- ## IMPORTANT - Look up and set the full path to the exported trained model binaries

- ### gsutil ls -r $OUTPUT\_PATH/export

- ### Look for directory $OUTPUT\_PATH/export/census/ and copy/paste the timestamp value (without the trailing colon) into the command below

- MODEL_BINARIES=gs://$DEVSHELL_PROJECT_ID-aip-demo/census_dist_1/export/census/<timestamp> ### CHANGE ME!
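
The copy/paste of the timestamp can also be scripted. The sketch below assumes the export directories are named with numeric timestamps of equal length, so that a plain sort puts the newest last; `latest_export` is a hypothetical helper that filters a `gsutil ls` listing read from standard input.

```shell
# Hypothetical helper: read a `gsutil ls` listing on stdin and print the
# newest export directory (largest numeric timestamp), without the trailing slash.
latest_export() {
  grep -E '/[0-9]+/$' | sort | tail -n 1 | sed 's:/$::'
}
```

With it, the variable could be set as `MODEL_BINARIES="$(gsutil ls "$OUTPUT_PATH/export/census/" | latest_export)"`. Echo the value and compare it with the listing before creating the model version.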

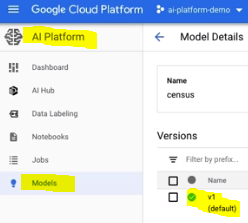

- ### Create version 1 of your model

gcloud ai-platform versions create v1 \

--model $MODEL_NAME \

--origin $MODEL_BINARIES \

--runtime-version 1.15

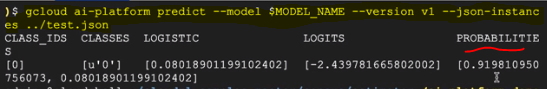

Step 11: Generate an online prediction first

- ### Send an online prediction request to our deployed model using test.json file

- ### Results come back with a direct response

gcloud ai-platform predict \

--model $MODEL_NAME \

--version v1 \

--json-instances \

../test.json

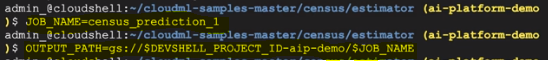

Step 12: Send a batch prediction job using the same test.json file

- ### Results are exported to a Cloud Storage bucket location

- ### Set job name and output path variables

- JOB_NAME=census_prediction_2

- OUTPUT_PATH=gs://$DEVSHELL_PROJECT_ID-aip-demo/$JOB_NAME

- ### Submit the prediction job

gcloud ai-platform jobs submit prediction $JOB_NAME \

--model $MODEL_NAME \

--version v1 \

--data-format TEXT \

--region $REGION \

--input-paths $TEST_JSON \

--output-path $OUTPUT_PATH/predictions


Step 13: View prediction results in the web console at

gs://$DEVSHELL_PROJECT_ID-aip-demo/$JOB_NAME/predictions/
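
The results can also be read from Cloud Shell instead of the web console. The sketch below assumes the batch job writes its result shards with names starting with `prediction.results-`; check the actual file names with `gsutil ls` first. `show_predictions` is a hypothetical helper name.

```shell
# Hypothetical helper: print every results shard written by a batch prediction job.
show_predictions() {
  output_path="$1"
  # Assumption: result shards are named prediction.results-NNNNN-of-NNNNN.
  # The wildcard is quoted so that gsutil, not the local shell, expands it.
  gsutil cat "$output_path/predictions/prediction.results-*"
}
```

Called as `show_predictions "$OUTPUT_PATH"`, this matches the `--output-path $OUTPUT_PATH/predictions` value used when the job was submitted.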
